机器学习-总结

Contents

[TOC]

Ch1 Overview of ML

对ML的分类:

监督学习(Supervised learning) 其基本思想是给定数据集中的样本是带有”正确答案”的,学习这些数据之后,再来新样本时,可以做出预测. 常见的问题有垃圾邮件分类。

无监督学习(Unsupervised learning) 给定数据集没有标签。常用算法:聚类。常见问题:新闻分类、细分市场。

Application:

- Supervised learning:

- Linear Classifier

- Support Vector Machines (hard SVM, soft SVM)

- Kernel Methods

- Deep Learning

- Unsupervised learning:

- Linear Discriminant Analysis(LDA)

- Principle Component Analysis (PCA)

- Generative Models(e.g. GMM, K-means—clustering)

Supervised learning

一些参数以及符号:

Model

The objective of the supervised learning is to find the parameters that minimises the average loss:

Unsupervised learning

Learning patterns when no specific target output values are supplied.(实际上就是没有label)

Examples:

- Clustering: group data into groups

- Building probabilistic model to explain data

- Anomaly detection

Types of machine learning: shallow vs. deep (传统机器学习VS深度学习)

| Traditional machine learning | Deep learning |

|---|---|

| Important step: feature design | Allows raw input |

| Usually work with “feature vectors” | End-to-end learning |

| Mapping function is simple, with relatively small number of parameters | Complex models, with millions of parameters |

| Works well if the input can be captured by vectors, small to medium number of samples | Works well if the “right feature” is unknown or the input is complex and a large number of samples are available |

进行ML的流程:

- Problem formulation – What is the input? What is the expected outcome?(输入输出?)

- Data collection

- Collect data

- Annotation(给data以label)

- Design machine learning algorithm

- Choose the machine learning model

- Choose the objective function

- Training machine learning model: Learn the decision system from training data

- Applying machine learning model

数学基础

线性代数

I为单位阵。(E)

矩阵转置:

逆矩阵inverse:

矩阵的迹(也就是对角线上的元素相加,被称之为

两个向量的内积:

p-norm:

欧几里得范数(Frobenius norm):

特征向量和特征值:

矩阵的特征分解:

A: diagonal matrix,其中除了主对角线上的元素,其他位置上的元素都为零。其第i个对角值为A的第i个特征值。

Q: 每一列column是第i个特征值对应的特征向量。

如果A是对称的(symmetric)—对角阵天然对称:

其中Q满足了正交矩阵(Orthogonal Matrix)的性质

矩阵求导

首先

概率与统计

期望Expectations:

离散的情况:

连续的情况:

方差Variance:

贝叶斯、最大似然估计在下面有公式,此处不赘述。

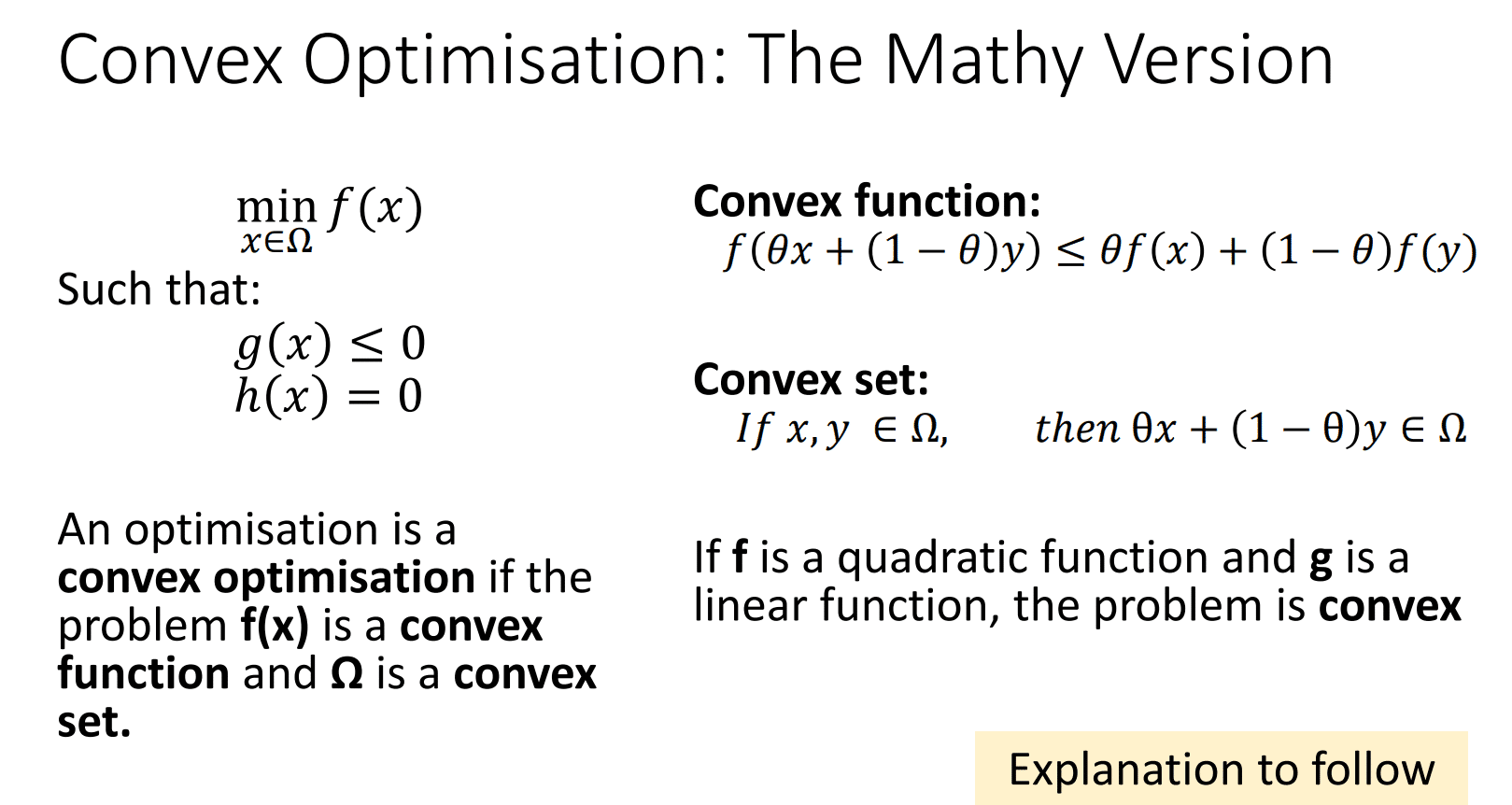

优化问题的种类

Type of optimization problems:

- Continuous vs. Discrete: binary or Integer variables

- Linear vs. Nonlinear

- Convex vs. nonconvex

对于凸优化问题(Convex Optimization)

其==Global optimum=Local optimum==.

证明属于convex function:

Ch2 Classification

classification与regression的对比:

| Classification | Regression |

|---|---|

| predict labels | predict values |

| categorical, often no order | deal with ordered values |

| all differences are equal | some differences are bigger than others |

classifier的一种划分:

- binary 二分类

- multi-class 多分类

- multi-label 多标签

classifier的划分以及应用:

instance-based(KNN): classifiers use observation directly(no underlying model)

K-Nearest neighbours just checks what the class of nearby training data are

generative(Naive Bayes): build a generative statistic model

Naïve Bayes attempts to model the underlying probability distributions of data

discriminative(Decision Tree): directly estimate a decision rule/boundary

Decision trees are concerned with establishing the boundaries between classes

欧式距离 Euclidean Distance:

马氏距离(估计不考)Mahalanobis Distance:

KNN

Test Error的计算:假设classifier是

当

值代表的是取周围的 个邻居。

- 当

值较小(例如 )时,模型的复杂度较高。每个测试样本只考虑其最近的一个邻居,这可能导致模型对噪声和局部变化非常敏感。在这种情况下,训练误差 很低,因为模型会完美地匹配训练样本,但测试误差 较高,因为模型在未见过的样本上可能无法泛化。 - 当

值较大时(例如 ),模型的复杂度较低。考虑更多的邻居可以平滑决策边界并减少噪声的影响。在这种情况下,训练误差 可能会增加,因为模型会更多地考虑其他类别的样本,测试误差 可能会减少,因为模型在未见过的样本上更具泛化能力。 - 当

值等于样本数量时(例如 ,其中 是样本数量),相当于使用全部训练数据作为邻居。在这种情况下,模型的复杂度较低,因为它会考虑所有训练样本的投票。训练误差 可能会增加,因为模型更加保守。测试误差 可能会减少,因为模型使用了更多的信息。

KNN is a type of lazy learning(实际上并没有train的过程)

- Lazy: learning only occurs when you see the test example

- Eager: learn a model prior to seeing the test example

KNN的好处与坏处(评价):

| Advantages | Disadvantages |

|---|---|

| Easy to understand | Hard to find a good distance measure |

| Very flexible decision boundaries | Irrelevant features and noise reduce performance significantly |

| No learning required | Cannot handle more than a few dozen attributes (Curse of Dimensionality) |

| Computationally expensive |

overfitting与underfitting

| overfitting | underfitting | |

|---|---|---|

| 特征 | Model could fit any data(trainset上结果很好), also fit the noise(testset上的结果很烂) | Model is too inflexible to match the data, too few learnable parameters, wrong learnable parameters(在trainset和testset上表现都很烂,根本没训练好) |

| 解决方法solution | Less complex model, More data, Regularisation (soft reduction in parameters) | Better data/pre-processing, More complex model, Less regularisation |

Validation set

在机器学习中,我们通常将数据集划分为训练集(Training Set)、验证集和测试集(Test Set)。验证集(Validation Set)是用于模型选择和超参数调优的一个独立数据集。

训练集: 用于模型的参数估计和训练过程

测试集:用于评估最终模型的性能

验证集:用于选择不同模型和调整超参数的过程

Cross validation: 其中的Leave-one-out cross-validation。将数据集分为多个子集,拿一个作为validation。

Naive Bayes

一些背景知识:

Bayes的limitations:需要有data,无data不工作(基于数据的统计,生成概率)。

Bayes公式:

如果说是独立的:

极大似然估计:

Naive Bayes:将Bayes Theorem应用于有着先验(Prior)概率或者可能性的数据集中。

Naive的来由:数据属性之间的相关性,我们假设是独立的(assume independence)。

Multinomial Naïve Bayes:

将极大似然估计(Maximum likelihood, 假设数据是从某个参数化的概率分布中生成的,我们要找到使得给定数据的概率最大化的参数值,用偏导计算 )应用在Naive Bayes中:

而这样不好计算,于是变成对数函数:

: 其中 代表其中的参数。

Application

Bag of words(词袋)

- X:words

- Y:是否是垃圾邮件(spam)—二分类问题

举例:“the most interesting film this summer”

数学原理:

- P(d|c):这表示在给定类别c的条件下,文档d出现的概率。它是通过假设文档中的每个词(

)在给定类别c的条件下是独立的,并且通过对这些条件概率的乘积来计算的。具体而言,这个公式使用了一个位置集合(positions),表示文档中被考虑的词的位置,然后计算每个位置上词 在给定类别c的条件下的概率 ,并将它们相乘得到 。 :这表示通过贝叶斯决策规则(Bayes’ decision rule)选择的最有可能的类别。在这个公式中,我们通过计算每个类别c的后验概率 来选择最有可能的类别。根据贝叶斯定理, 可以通过 计算得到。 :这表示类别c在整个数据集中出现的概率,也称为类别的先验概率。它可以通过计算在训练数据中类别c的频率来估计。

接下来要如何计算呢?还是一样的,

Decision Tree

一个决策的过程,我们需要确定:

- Which variable to test

- What to test it against

- What function to apply to a variable prior to a test

决策树模拟了一个树形结构,其中每个内部节点表示对一个特征的测试,每个分支代表测试的结果,每个叶节点代表一个类别或回归值。它的构建过程从根节点开始,选择最佳的特征进行测试。选择的依据可以是信息增益(Information Gain:

- 分类问题中:决策树的叶节点表示一个类别,通过在树上从根节点到叶节点的路径,决策树可以对新的样本进行分类。

- 回归问题中:叶节点表示一个预测值,通过在树上从根节点到叶节点的路径,决策树可以对新的样本进行回归预测。

Ch3 Regression

对regression与classification的区分:

classification中Y是离散变量,regression中Y是连续变量。

Application

- 房价预测

- 图像处理(Image processing)

- 人数统计(Crowd counting)

- 生成声音(Generate sounding)

Linear Regression

线性回归:给定一些样本点,找出一条可以拟合的曲线。

计算

上述的

不能用Regression去Classification的理由:

Limitation:

– Put unnecessary requirements to the predicted output

– May increase the fitting difficulty and lead to bad training result

But why is it commonly used in practice?

– Close-form solution, less storage for low

-dimensional data

– Quick update for incremental learning, distributed learning

Regularized Regression

即添加了正则化惩罚项regularization的Linear Regression。

regularization的用途?

- 避免过拟合 avoid overfitting

- Enforce certain property of solution

其形式:

对p-范式的介绍(p-Norm):

当p=1的时候:Lasso Regression

当p=2的时候:Ridge Regression

Ridge Regression

其Loss function的形式是:

对其求解:

如果

Lasso Regression

其Loss Function的形式是:

- L1 norm encourages sparse solution. This could be useful for understanding the impact of various factors, e.g. perform feature selection.

- Sometimes it can lead to an improved performance since it can suppressing noisy factors. 其原因是:计算出来

。使得某些特征的 可以取0。 - Unfortunately, it does not have a close-form solution.

Support Vector Regression

Key idea: if the fitting error is already small enough, do not make it smaller.

SVR通过在二维空间中找到一个最优超平面来实现对回归过程的建模。由于这个最优超平面仅考虑到了在训练集周围边缘的点,使得模型对数据点的过拟合现象进行有效地避免。同时,根据再投影误差作为惩罚项的复杂度控制参数可以很好地调节回归模型的灵活性。

硬间隔(Hard-margin):

软间隔(Soft-margin):

SVR:

如果

Dual form of SVR

原始问题:

对偶问题(dual problem):

Ch4&5 Linear Classification and SVM

属于statistical ML。

Primal Problem VS Dual Problem: 原始问题is hard。对偶问题的解恰好对应着原始问题的解,因此只要能解决对偶问题,就意味着我们解出了原始问题。

Linear Classifiers

在线性的分类器中处理分类,就是计算特征的线性组合:

如果是二分类问题:结果大于0,属于class 1,结果小于0,属于class 2。

Convex Theorem

==用于证明一个function是否是convex的==

如果一个函数是凸函数,那么它将满足:

SVM

目标:find a hyperplane

Hard-margin

因此我们一开始的goal function是:

该形式可以转化成:

该形式符合凸优化理论,可以使用拉格朗日乘子法解决问题(Lagrangian Dual Problem)

对

: is a weighted sum of the input vectors ( ) - 很多情况下

: because this point doesn’t contribute to the margin

再把

因为:

Soft-margin

其goal function:

对于参数

- Low C: we don’t pay anything for these violations (width is king)

- High C: we pay a lot for violations (no violations is king)

突然在PPT中介绍了一下hinge loss——

Hinge Loss: 一种常用的损失函数。Hinge Loss用于衡量样本的分类错误和分类边界的间隔。其在soft-margin中的定义如下:

: 表示样本的真实标签(通常为-1或1)

: 表示样本的预测分类(即决策函数输出的值)。 Hinge Loss的目标是使正确分类的样本的损失为0,并增大错误分类样本的损失。在软间隔分类中,Hinge Loss通常与正则化项结合使用,以平衡分类错误和模型复杂度。通过最小化Hinge Loss和正则化项,可以得到一个具有较小间隔违规和较小模型复杂度的分类模型,从而在训练集上和测试集上获得良好的性能。

接下来继续我们soft-margin的求解部分:

求解偏导,因为只是加了其他项,所以

其他的

KKT条件:

对比一下hard-margin以及soft-margin的拉格朗日函数:

我们容易发现:

对于soft-margin将

因为:

你会发现soft-margin计算出来的

因此对偶问题是:

我们的原问题是:

怎么从对偶问题转化为原问题呢?

对于

- Find a

vector - Thus,

- Thus,

在soft margin的情况下,由于存在一些样本落在间隔边界内部,因此选择多个支持向量计算偏置b可能更合适。一种常见的做法是选择所有满足

Ch6 PCA, LDA and Dimensionality reduction

Dimensionality reduction

其基本原理是:Preserve “useful” information in low dimensional data.

其常用的方法:PCA,LDA

reasons:

- Extract underlying factors

- Reduce data noise

- Face recognition

- Applied to image de-noising

- Reduce the number of model parameters

- Avoid over-fitting

- Reduce computational cost

- Visualization

PCA(Principal Component Analysis)

==无监督学习方法。==

PCA:

- Transform data to remove redundant information

- Keep the most informative dimensions after the transformation

其步骤:

去中心化(De-correlating data): Correlation can be removed by rotating the data point or coordinate

计算协方差矩阵,找特征向量与特征值(Eigen decomposition)

此处的

是单位矩阵,A是对称矩阵,式2式正交矩阵Q的性质, 是协方差矩阵。

英文版:

- Subtract mean

- Calculate the covariance matrix

- Calculate eigenvectors and eigenvalues of the covariance matrix

- Rank eigenvectors by its corresponding eigenvalues

- Obtain P with its row vectors corresponding to the top k eigenvectors

其数学原理:

因此原始的协方差矩阵为:

我们想找到一个矩阵P,可以对数据去中心化:

有因为:

对

令

High dimensionality issue(Kernel PCA)

- Centralize data

- Calculate the kernel matrix

- Perform Eigen-decomposition on the kernel matrix and obtain its eigenvector

- Obtain the Eigenvector of the covariance matrix by

LDA(Linear Discriminative Analysis)

有监督学习:利用了样本的类别标签信息来进行模型训练和分类。

Supervised information :

- Class label

- Data from the same class => Become close

- Data from different classes => far from each other

LDA假设数据满足高斯分布,并且根据类别信息进行有监督训练。它的目标是通过最大化不同类别之间的距离(类间散度)和最小化同一类别内部的方差(类内散度),来实现在新的低维空间中使得不同类别更好地可分的投影。

将数据投影到低维空间,其新的均值以及方差如下:

类内(between)以及类间(within)散度:

其目标函数是:

将上述形式化为拉格朗日对偶问题的形式:

对其求偏导得:

得到的形式刚好是求解特征向量的标准形式(

At optimum, we have $p^{^T}S_bp^=\lambda$

表示在最优条件下,投影向量

这个方程用于确定最佳的投影向量

如果

如果LDA且Multi-class:

在

Ch7 Clustering

Unsupervised learning

Learning without supervision

- Find the distribution of data

- Learning to generate samples

- Clustering

- Anomaly detection

- Feature learning

Clustering

- One of the most important unsupervised learning tasks

- Clustering is the process of identifying groups, or clusters, of data points in a (usually) multidimensional space.

- Connection to distribution modeling: related to mixture model

Clustering: discover groups such that samples within a group are more similar to each other than samples across groups.

Clustering的Application:

- Segmentation

- Superpixel

- Vector quantization in Bag-of-feature model

Clustering的Ingredient:

- A dissimilarity function between samples

- A loss function to evaluate clusters

- Algorithm that optimizes this loss function

衡量dissimilarity function:

Clustering is invariant to rotation and translation of features, but not to scaling.

K-means Clustering

Basic idea:

- Each sample can only fall into one of the k groups

- From each group, we can calculate the mean vectors, that is, the average of samples falling into a group

- Any sample should be closest to the mean vector of its own group than the mean vectors of other groups

K-means take an iterative approach for solving the problem.

Algorithm:

- E-step: fixed the current mean vectors of each group. If a sample is closest to the i-th mean vector, then the sample is assigned to the i-th group

- M-step: fixed the assignment, calculate the mean vector of each group

- Iterate E step and M step, until converge

Questions:

Why using those two steps can lead to converged result?

通过反复迭代E-steps和M-steps, K-means算法会逐渐优化簇分配和质心的位置,直到达到收敛状态。在收敛状态下,簇分配不再发生变化,质心的位置也相对稳定。这意味着算法已经找到了一个相对稳定的聚类结果,达到了收敛状态。

Why always calculate mean vectors?

为了更新簇的位置,并在每次迭代中重新计算数据点与质心之间的距离,从而进行簇分配。

What is the objective function of k-means clustering algorithm?

– Objective function will give a measurement of the goodness of clustering results

: Each point will be assigned to one of the prototypes, the distance between the point to the prototype is the cost of the assignment. We are seeking the optimal assignment that can minimize the cost.

: We are also seeking the optimal prototypes that can minimize the total cost.

Choose K? Cross validation

Limitations of k-means

- Hard assignments of data points to clusters can cause a small perturbation to a data point to flip it to another cluster

- Assumes spherical clusters and equal probabilities for each cluster

- Those limitations can be solved by GMM based clustering

Gaussian Mixture Models(GMM)

混合高斯模型:GMM can characterize the distribution that contains K clusters. GMM假设观察的数据点是从多个高斯分布的混合中生成的,每一个高斯分布有一个

Generative process:

Randomly select the k-th Gaussian distribution according to

Randomly sample x from the selected Gaussian

distribution

Latent Variable:

- Intermediate results inside a generative process

- Each sample is associated with a latent variable

- We do not know its exact value

- But we can infer how likely it can be

对于隐变量,我们无法直接观察或测量它,但是它对最终的结果有影响。只能推测其可能性。

比如学生的学习能力,是latent variable,会对考试结果产生影响,但是我们不能确切地衡量学生地学习能力。

parameter estimation for GMM: use MLE(最大似然估计),然后求解偏导

在GMM中,估计模型参数通常采用期望最大化算法(Expectation-Maximization,EM算法)。EM算法通过迭代的方式,交替进行E步和M步。

EM algorithm solution to GMM

E-step: calculate the posterior probability about the latent variable (for each

calc prob for each cluster, this corresponds to assigning to a cluster in k-means)—根据当前的模型参数,计算每个数据点属于每个成分的后验概率(即给定数据点的观测值和模型参数,该数据点属于每个成分的概率) M-step: estimate parameters based the expectation of latent variables (recalc GMM based on estimate in step E, in k-means this is recalc the centroids)—使用这些后验概率更新模型参数,包括成分的均值、协方差矩阵和每个成分的权重。

EM algorithm (More general case)

- The above solution represents a special case of a more general method called EM-algorithm

- It is widely used for learning the probabilistic models with latent variable inside its generative process.

- It iterates between an E-step and an M-step.

- We can theoretically prove that each step will lead to a nonincrease of loss function

- The iterative process will converge to a local minimum

其通用形式如下:

E-step:计算隐变量的后验概率:

, 是目前模型的参数 M-step:基于期望的似然估计更新模型参数

与K-means 的 connection:

- E-step in GMM is a soft version of K-means.

, instead of . - M-step in GMM estimates the probabilities and the covariance matrix of each cluster in addition to the means.

- All

are equal. , as , and the 2 methods coincide.

Ch8 Kernel Method

Kernel function的性质(证明Mercer定理):

- positive semi-definite(半正定):

Mercer定理:如果K(x, y)满足一定的条件,那么它可以表示为一个内积空间中的正定核函数,即存在一个特征空间(称为希尔伯特空间),以及一个映射ϕ(x)将数据映射到该空间中,使得K(x, y)可以表示为内积的形式。

如果对于任意的n个实数

和Ω中的n个点 ,矩阵 是对称的,并且对于任意非零的向量 ,有 ,那么K(x, y)是一个半正定核函数。

Polynomial Kernel

多次项核函数,比较常用。其形式如下:

Radial Basis Function Kernel(高斯核)

更常用的核函数,其形式如下:

Kernel in SVM

我们原始的对偶问题是:

新的对偶问题实际上是把

我们选定核函数:原先的升维函数不宜求解,但核函数可以求解

- We stop caring about what the transform represents

- We start caring about how well it classifies our data

其他详细地推导没有,主要是在CVX中的应用。

Kernel in K-means

Map the data space into some kernel space.

Hope this new space better allows the data to be separated!

下面是K-means的过程:

1.Assigning Points to Clusters

(原来的)

(将

2.Defining Cluster Mid-Points

(原来的)

(将

Kernel in Linear Regression

原来的:

现在的:

我们令

o: 映射函数φ(x)可能带入的一个额外 bias term

:

- o不代表任何一个实际样本点。它仅仅表示引入核技巧可能带来的一个额外的bias项。

- 因此,任何样本点

与o的距离 都是无定义的。无法直接计算核函数值。 - 因此就直接定义=0

Kernel in PCA

| Tasks | Problems |

|---|---|

| Replace x with |

Centralising the data |

| Turn everything into dot products | Calculating the covariance matrix |

| Use |

Calculating the projection matrix |

| Never use |

计算协方差矩阵:

: centralised data matrix(d x n) d: 属性的维数,n:有多少样本

:(d x d)协方差矩阵

: 协方差矩阵的特征向量

经过kernel matrix:

计算去中心化:

here represents a NxN matrix filled with 1/N

计算投影矩阵:原来是

带入

Ch9 Ensemble Method

When to use?

- Individual decisions are cheap, so making many of them is easy

- Making an expert is expensive or impossible

- Your amateurs are independent of each other

Decision Tree

Entropy=

Gini Coefficient of Purity是利用Gini不均衡度来度量分类问题样本集合的纯程度。它服判定模型预测性能的一个指标(衡量决策树每个节点的纯度):

- 它通过计算样本集合中的类分布不均衡程度,来反映纯度程度。

- 如果样本全是同一类,类分布最均衡,Gini值为0,这也就是100%的纯度。

- 否则不同类样本混杂,Gini值越高,表示纯度越低。

The Flaw of the Decision Tree:

- We have a series of decisions, but some of these decisions can only be conceived of as overfitting. (根据训练数据集递归地构建依赖于数据的决策边界,不断地细分训练集样本)

- There are numerous places we could place decision boundaries. (会导致决策边界过于复杂,训练集效果不错,但是测试集的效果很差)

- How can we avoid these overfit decision boundaries?—比如剪枝,或限定最大树深

Random Forest

定义:A random forest is a series of decision trees which are randomized. You then apply your new measurement to each decision tree, picking the aggregate decision by voting.

容易出现的问题:

- Some potential decision boundaries represent overfitting.

- Some features are not representative of generalisation.

我们使用bootstrapping方法(随机有放回地采样—random selection with replacement)得到子数据集(subsets)

值得考虑地问题是:

How many trees?

Too few trees:

- Might interpret random variation as signal.

- Biased towards individual trees.

Too many trees: Slow?

How many dimensions/tree? —从实践来看一般是

Bagging doesn’t choose a random subset of dimensions

- Bagging算法为了减轻过拟合,其主要思想是从原始训练数据集中采样出多个并行的子数据集,来训练多个基学习器模型。(采样过程使用的是boostrapping方法)

- 但在采样子数据集时,Bagging采样的是样本实例,而不是特征维度。

- 也就是说,每个子数据集中包含的是原始所有特征维度对应的一部分样本。而不是随机选择一部分特征维度。

Boosting

idea: We build a classifier, but it incorrectly classifies some samples, we can further train this classifier to perform better on the incorrectly labelled samples(从错误中学习)

- We make decisions based on a weighted decision of minimal ‘weak classifiers’ (stumps)

- After we have built our ‘one stump’ we can judge how good it is, by the number of instances it gets wrong.

Adaboost

目标函数:

loss function:

还有其他的boost的方法,比如XGboost,Gradient Boost。

添加weak learner

第

因为

对

What do we have in common?

- We are weighting our bad decisions higher (i.e., boosting)

- We are using ensemble learning

Ch10 Deep Learning

关于Loss的介绍:

- Loss is some measure of the error between the predicted and target values

- A metric for determining how well a model has trained

- Lower loss/cost/error => better model => better learned parameters

我们常用的Loss是MSE(Mean Squared Error):

全局最小值以及局部最小值:Global Minimum is the smallest error out of all possible parameter configurations, Local Minimum is the smallest error in some region of space.

一般使用梯度下降(Gradient Descent)来更新参数:

Learning Rate(

Choice of learning rate is very important:

- Too small… takes forever to train, can easily get stuck in tiny local minima

- Too big… can overstep or become unstable

Activation Function:常用的有sigmoid,relu,Tanh函数

Softmax:将结果转化为概率分布

Neural Networks

Terms:

- Iterations (one per ‘batch)

- Epochs (one per ‘all samples’)

- Batches(对于图片来说就是mega-pixels)

CNN

其步骤如下:

1.卷积层(Convolutional Layer)—利用padding补全

- 使用卷积操作提取图像中的特征,如边缘、色彩等。

- 参数包括过滤器(kernel)和偏置。

2.池化层(Pooling Layer)

- 对特征图区域进行下采样,降低空间维度,增加翻转不变性和控制过拟合。

- 常用最大池化和平均池化。

3.激活层(Activation Layer)

- 为卷积层和全连接层添加非线性机能,如ReLU、Sigmoid、Tanh等。

- 全连接层(Fully Connected Layer)

- 将上一层特征整合成一维数组,与该层权值相乘实现分类或回归功能。

4.Softmax层

- 将全连接层输出进行Softmax归一化,输出概率评分实现分类。

考点整理

cross-validation:

我们学习的不同方法是属于有监督学习还是无监督学习?

LDA,PCA,GMM,SVR等等

instance/generative/discriminative的区别以及应用

SVM的公式推导,各个概念的定义:margin,soft/hard-margin,dual/primal problem

集成学习中的概念、回归模型的特点

K-means,GMM概念

PCA的概念以及过程,LDA概念,核函数的证明

最后有关CNN的计算:整体的过程以及参数的个数